As capacitive touch interfaces are adopted more widely, they are replacing conventional mechanical keys with limited functions. Control panels with user-friendly designs can now be operated with actions such as finger touches and swipes. Capacitive touch technology allows even devices designed for complex, highly skilled operations to be controlled with intuitive finger movements. Performing tasks through finger movements and hand gestures has become a reality. Because gesturing is one of the natural ways humans communicate, adopting gesture control for interaction with smart end products is a logical step. Intelligent, sensor-driven gesture recognition is now achievable thanks to major advances in embedded compute devices, such as microcontrollers and microprocessors, integrated at the endpoint.

Touchscreen applications now extend far beyond typical smartphone use to more robust and safety-critical industrial applications. An intelligent surface that knows what you want simply by sensing the touch of a finger can be very useful, and even AutoML and other embedded AI/ML tools are moving toward touch-based, drag-and-drop analytics.

Gesture recognition is designed to improve human-computer interaction and can be implemented in a variety of ways, such as through touch screens, cameras, or peripherals. It is also part of Touchless User Interface (TUI) applications, which can be controlled without physical contact. Vision-based gesture recognition uses a camera and a motion sensor to track the user's movements and interpret them in real time, and motion sensors in devices can track and interpret gestures as their primary input source. Hand detection and gesture recognition let people use their hands for Human-Machine Interaction (HMI) without touching a surface, operating a switch, or holding a controller.

A noteworthy example is the AI virtual mouse, which tracks the user's fingertips through a built-in camera or webcam and uses them to move the cursor, perform mouse operations, and scroll, so the computer can be operated virtually through gestures. Gestures also help operators control and monitor safety-critical and hard-to-access equipment, and hand detection applies to augmented and virtual reality environments, sign language recognition, gaming, and other use cases.

Embedded gesture recognition offers a touchless form of Human-Machine Interaction (HMI). At present, both gesture recognition and touch are used for Human-Computer Interaction (HCI), or for Human-Machine Interaction in the case of the Internet of Things (IoT).

In terms of Human-Machine Interaction, gestural control systems are considered the most intuitive and natural, and their development continues to evolve with the sensors used to capture gestures. In this context, gesture recognition has matured from an intuitive practice into more formal recognition methods, driven by experimental improvements in the sensors used for this purpose.

Artificial Intelligence (AI) and machine learning techniques make it possible to perform smarter real-time analysis and improve the results of human-machine interaction, while IoT provides the infrastructure for applying them. AI provides decision-making capability through machine learning algorithms, whereas IoT provides connectivity and data sharing. Combined, AI and IoT enable further capabilities, such as learning from interactions with users, service providers, and other relevant devices on the network.

AI can enhance gesture and touch interaction by predicting what the user intends to select on the screen as soon as the on-screen action begins, which speeds up the interaction. For example, in driving-simulation experiments, predicting the user's intended target at the start of a hover action reduced interaction time and effort by up to 50%. Case studies further indicate that AI can also improve accuracy when gestures are used to select IoT devices.
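To make the idea of early target prediction concrete, here is a minimal sketch in C. It deliberately swaps the AI model described above for a simple geometric heuristic: it extrapolates the first movement of the fingertip as a ray and picks the on-screen target closest to that ray. The type `point_t` and the function `predict_target` are hypothetical names for illustration, not part of any Renesas API.

```c
#include <float.h>
#include <stddef.h>

/* Hypothetical 2D point in screen coordinates. */
typedef struct { float x; float y; } point_t;

/*
 * Heuristic (not an AI model): extrapolate the motion from p0 to p1 and
 * return the index of the target lying closest to that ray, ahead of the
 * finger. Returns -1 if the finger has not moved or no target is ahead.
 */
int predict_target(point_t p0, point_t p1, const point_t *targets, size_t n)
{
    float dx = p1.x - p0.x;   /* direction of motion (not normalized) */
    float dy = p1.y - p0.y;
    if (dx * dx + dy * dy < 1e-6f) {
        return -1;            /* no movement yet: nothing to extrapolate */
    }

    int best = -1;
    float best_cross = FLT_MAX;
    for (size_t i = 0; i < n; i++) {
        float tx = targets[i].x - p1.x;   /* vector from finger to target */
        float ty = targets[i].y - p1.y;
        if (tx * dx + ty * dy <= 0.0f) {
            continue;                     /* target lies behind the motion */
        }
        /* |cross product| is proportional to the target's distance from
           the ray, since the motion vector is the same for every target. */
        float cross = tx * dy - ty * dx;
        if (cross < 0.0f) {
            cross = -cross;
        }
        if (cross < best_cross) {
            best_cross = cross;
            best = (int)i;
        }
    }
    return best;
}
```

A trained model can learn user-specific patterns that a straight-line extrapolation misses, but the heuristic shows why committing early helps: the target can be highlighted before the finger arrives.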

Selecting the right embedded compute device and getting the overall hardware design right are key to building a touch and gesture solution that handles electrical and RF noise effectively. Accuracy is an important concern in such solutions, and the right hardware is the starting point for achieving it. Gesture and touch input devices include advanced cameras, smartwatches, wearable sensors, and data gloves, each of which must be paired with well-tested AI algorithms. For this reason, Renesas offers a broad range of hardware suited to many of these use cases.

As capacitive touch and gesture control spread, panel sensitivity and high noise resistance have become key requirements for accurate control movements and sophisticated operational performance. There is also demand for tolerance to environmental factors such as water, dirt, and temperature variations. Beyond these requirements, development time and cost remain challenges to address.

With our 2nd generation capacitive touch solution, Renesas offers a revolutionary design for controlling devices and equipment. We provide a user-friendly environment that lowers the barrier to entry for developing capacitive touch and 3D gesture recognition solutions.

e-AI × 3D Gesture Recognition – Renesas Solution for Every Application

The “3D gesture recognition” feature of the Renesas QE tool for Capacitive Touch supports the development of gesture applications that use embedded AI. Developing AI applications involves many complex steps; the QE tool supports these steps so that embedded engineers can develop AI applications without specialized AI skills.

Enable "3D gesture recognition"

The feature provides three core functions for developing effective, intelligent gesture applications.

1. Recording

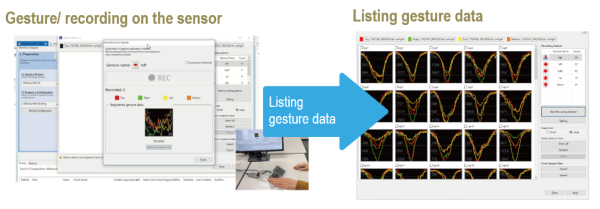

You register gesture data simply by performing, on the capacitive sensor, the gesture you want the AI to recognize. The registered data is shown in a list, from which you can delete data from failed gestures or import existing data.
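The source does not describe how a recording is delimited, but any recorder has to decide where a gesture starts and ends in the sensor stream. The sketch below shows one common approach, assuming a single capacitive channel with a known no-touch baseline and a fixed threshold: the gesture window spans the first through last sample that deviates from the baseline by more than the threshold. The function `find_gesture_window` and its parameters are hypothetical and are not how the QE tool is documented to work.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Hypothetical helper: find the span of samples in which a capacitive
 * channel deviates from its no-touch baseline by more than `threshold`.
 * On success, writes the first and last active indices and returns 1;
 * returns 0 if no sample crosses the threshold (no gesture performed).
 */
int find_gesture_window(const uint16_t *samples, size_t n, uint16_t baseline,
                        uint16_t threshold, size_t *start, size_t *end)
{
    int found = 0;
    for (size_t i = 0; i < n; i++) {
        int32_t delta = (int32_t)samples[i] - (int32_t)baseline;
        if (delta < 0) {
            delta = -delta;            /* a hand may raise or lower the counts */
        }
        if (delta > threshold) {
            if (!found) {
                *start = i;            /* first active sample */
                found = 1;
            }
            *end = i;                  /* extend to the last active sample */
        }
    }
    return found;
}
```

A real 3D gesture sensor uses several electrodes, so a practical recorder would apply a rule like this across all channels and add some padding around the detected window.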

2. AI Generation

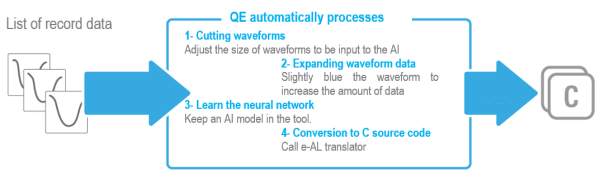

While creating the AI (data pre-processing, deep learning, and conversion to C source code), the Renesas QE tool automatically applies processing optimized for gestures. Automating AI creation is usually difficult, but it becomes feasible here because the target is restricted to gesture recognition.
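The source does not specify which pre-processing the QE tool applies. As an illustration of what gesture pre-processing commonly involves, here is a minimal sketch of two generic steps: resampling a variable-length waveform to the fixed input length a neural network expects, using linear interpolation, and min-max normalizing it to [0, 1]. The function name `preprocess_waveform` is hypothetical.

```c
#include <stddef.h>

/*
 * Hypothetical pre-processing step: resample `in` (n_in samples) to
 * `n_out` samples by linear interpolation, then min-max normalize the
 * result into [0, 1]. A constant waveform normalizes to all zeros.
 */
void preprocess_waveform(const float *in, size_t n_in, float *out, size_t n_out)
{
    if (n_in == 0 || n_out == 0) {
        return;                            /* nothing to do */
    }

    /* 1. Resample to a fixed length. */
    for (size_t i = 0; i < n_out; i++) {
        if (n_in == 1 || n_out == 1) {
            out[i] = in[0];
            continue;
        }
        float pos = (float)i * (float)(n_in - 1) / (float)(n_out - 1);
        size_t k = (size_t)pos;            /* left neighbour */
        if (k >= n_in - 1) {
            out[i] = in[n_in - 1];         /* last output maps to last input */
            continue;
        }
        float frac = pos - (float)k;
        out[i] = in[k] + frac * (in[k + 1] - in[k]);
    }

    /* 2. Min-max normalize so the waveform's shape matters more than its
          absolute amplitude, which varies between users and sensors. */
    float lo = out[0], hi = out[0];
    for (size_t i = 1; i < n_out; i++) {
        if (out[i] < lo) lo = out[i];
        if (out[i] > hi) hi = out[i];
    }
    float range = hi - lo;
    for (size_t i = 0; i < n_out; i++) {
        out[i] = (range > 0.0f) ? (out[i] - lo) / range : 0.0f;
    }
}
```

For example, the input `{0, 10, 20}` resampled to five points becomes `{0, 0.25, 0.5, 0.75, 1}`.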

3. Monitoring & Tuning

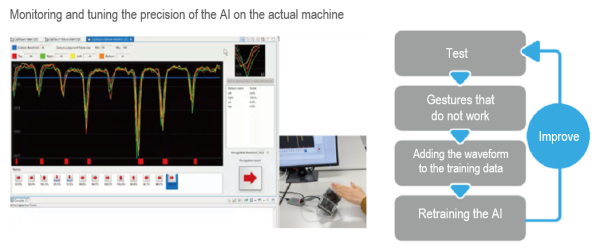

You can monitor and tune the accuracy of the generated AI on the actual hardware. If a gesture is not recognized correctly, click the [Add Data] button on the monitoring screen of the Renesas tool to add its waveform to the training data immediately, feeding it back to the AI algorithm.
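In the QE tool, the user decides when to press [Add Data]. The sketch below shows, under stated assumptions, the kind of check that can point to candidate waveforms: take the highest-scoring class from the network's output, and flag the sample for review if that class differs from the gesture the user actually performed or if its score falls below a confidence threshold. The type `gesture_result_t`, the function `evaluate_scores`, and the threshold parameter are hypothetical.

```c
#include <stddef.h>

/* Hypothetical result of one inference on a recorded gesture. */
typedef struct {
    int label;        /* index of the highest-scoring gesture class */
    float score;      /* that class's score, e.g. a softmax probability */
    int needs_review; /* 1 if misrecognized or below the confidence threshold */
} gesture_result_t;

/*
 * Pick the top class from `scores` and flag the sample if it does not
 * match `expected_label` (the gesture actually performed) or if its
 * score is below `min_confidence`.
 */
gesture_result_t evaluate_scores(const float *scores, size_t n_classes,
                                 int expected_label, float min_confidence)
{
    gesture_result_t r = { -1, 0.0f, 1 };
    for (size_t i = 0; i < n_classes; i++) {
        if (r.label < 0 || scores[i] > r.score) {
            r.label = (int)i;
            r.score = scores[i];
        }
    }
    /* A flagged sample is a candidate waveform to add to the training data. */
    r.needs_review = (r.label < 0 || r.label != expected_label ||
                      r.score < min_confidence) ? 1 : 0;
    return r;
}
```

Flagging low-confidence correct results as well as outright misrecognitions helps surface gestures that sit near a decision boundary before they start to fail.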

Looking ahead, the technology continues to evolve to address several challenges to gesture recognition accuracy. These include low-quality sensor data, typically caused by bulky sensors that make poor contact with the user, and the effects of occluded objects and poor lighting. Further challenges arise when combining visual and sensor data: the two form mismatched datasets that must be processed separately and merged at the end, which is inefficient and slows response times.

The journey ahead is an exhilarating one, as demand for enhanced intelligent touch and smart gesture recognition solutions is expanding, with breakthrough applications across consumer and industrial segments. For further information, visit our Capacitive Touch Sensor Solutions page.