Overview

The AI compiler we are developing generates high-performance executable code for Renesas R-Car devices from a pre-trained deep neural network.

Background

Performing convolutional neural network (CNN) inference in real time is challenging because embedded hardware is severely constrained in both compute resources and power consumption. To perform CNN inference effectively on an R-Car V series device, Renesas designed a heterogeneous architecture that combines a programmable processor (CPU) with accelerators dedicated to computing the individual layers of the network.

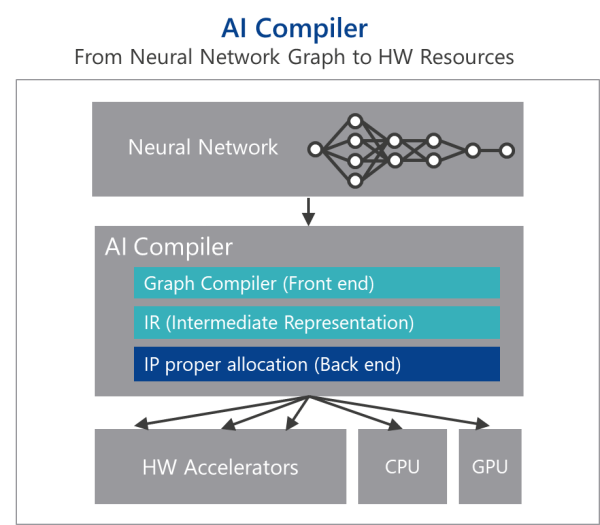

A common software architecture for an AI compiler has two parts: the compiler “front end” and the compiler “back end”, as shown in the figure. Inside the AI compiler, a deep neural network is translated into multi-level intermediate representations (IRs). The front end is responsible for hardware-independent transformations and optimizations on the graph IR, while the back end is responsible for hardware-specific optimizations and code generation.
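The front end / back end split can be illustrated with a minimal Python sketch. It is not Renesas' implementation: the `Node` class, the `fuse_conv_relu` pass, the `ACCELERATOR_OPS` set, and the emitted pseudo-instructions are all hypothetical names chosen for illustration, and the graph is assumed to be a topologically ordered list. The front-end pass fuses a convolution with the ReLU that directly follows it, which does not depend on the target hardware. The back-end step then decides, per operation, whether an assumed accelerator or the CPU should run it.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One operation in a toy graph IR (hypothetical, for illustration only)."""
    name: str
    op: str
    inputs: list = field(default_factory=list)


def fuse_conv_relu(graph):
    """Front-end pass (hardware-independent): fuse conv2d + relu into one node.

    Assumes `graph` is topologically ordered. A conv2d is fused only when the
    relu directly follows it in the list and is its sole consumer.
    """
    # Count how many nodes read each value, so shared outputs are not fused away.
    consumers = {}
    for node in graph:
        for name in node.inputs:
            consumers[name] = consumers.get(name, 0) + 1

    fused = []
    renamed = {}  # removed relu name -> name of the fused node that replaces it
    for node in graph:
        inputs = [renamed.get(name, name) for name in node.inputs]
        prev = fused[-1] if fused else None
        if (
            node.op == "relu"
            and prev is not None
            and prev.op == "conv2d"
            and inputs == [prev.name]
            and consumers.get(prev.name, 0) == 1
        ):
            fused[-1] = Node(prev.name, "conv2d_relu", prev.inputs)
            renamed[node.name] = prev.name
        else:
            fused.append(Node(node.name, node.op, inputs))
    return fused


# Assumption: ops the accelerator can run. This is NOT a real R-Car capability list.
ACCELERATOR_OPS = {"conv2d", "conv2d_relu", "max_pool"}


def lower(graph):
    """Back-end step (hardware-specific): pick a target and emit pseudo-code."""
    program = []
    for node in graph:
        target = "ACCEL" if node.op in ACCELERATOR_OPS else "CPU"
        args = ", ".join(node.inputs)
        program.append(f"{target}: {node.name} = {node.op}({args})")
    return program


def compile_model(graph):
    """Run the front-end pass, then the back-end lowering."""
    return lower(fuse_conv_relu(graph))


example = [
    Node("x", "input"),
    Node("c1", "conv2d", ["x"]),
    Node("r1", "relu", ["c1"]),
    Node("p1", "max_pool", ["r1"]),
    Node("s", "softmax", ["p1"]),
]
for line in compile_model(example):
    print(line)
```

In this toy example the fused `conv2d_relu` and the pooling step are mapped to the assumed accelerator, while `softmax`, which is not in the assumed supported set, falls back to the CPU. A real compiler would use several IR levels and far richer cost models; the sketch only shows where each responsibility sits.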

Examples

Renesas is mainly developing hardware-dependent optimization algorithms that make maximum use of the heterogeneous architecture of the R-Car V series. To improve performance further, it is necessary to understand the latest papers on deep neural networks and to hold technical discussions with other engineers. We are seeking highly motivated engineers in this area.

Conclusion

Deep neural networks are a technology field that has been studied extensively in recent years and continues to evolve. Renesas will provide an advanced AI tool to assist the development of autonomous driving technology.