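Recognizing human screams is critical in disaster relief, security, and healthcare. Imagine being trapped in an elevator after the usual means of communication have failed. A scream detection system could recognize your screams for help and immediately trigger an emergency response, such as notifying security staff or sounding an alarm, so that help arrives quickly and lives can be saved.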

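Renesas' Reality AI Scream Detection is a machine learning model designed specifically to recognize human screams. Rather than simply reacting to any loud sound, the model is trained to distinguish genuine screams for help from a wide range of background noise. A detection can also trigger the immediate dispatch of responders, which is especially important in enclosed or isolated environments where safety is critical.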

How does scream detection work?

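The scream detection model is trained on collected audio data so that it learns to distinguish between different types of sound. The model is developed in the following steps: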

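- Collect training data: Training starts with collecting a large amount of audio data. The data comes from a public dataset* containing a wide variety of real-world audio samples. Its "Scream" category includes both intense non-verbal screams and screams containing words, and these clips are used to teach the model to recognize scream signals. So that the model also learns which sounds are not screams, several non-scream classes, such as wind, ambient noise, conversation, singing, music, and applause, are included in training to improve its ability to tell the two apart.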

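- Extract features: Next, key acoustic features are extracted from the audio files. These features help the model pick out the characteristics specific to screams, even in complex, noisy environments.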

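- Train the model: Once the best features have been identified, a machine learning classifier is trained on them to distinguish "scream" from "non-scream" audio. During training, the model parameters are adjusted iteratively to reduce classification error and improve overall performance.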
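
The three steps above can be illustrated end to end with a toy example. This is a minimal sketch under stated assumptions, not the Reality AI Tools pipeline: the source does not say which features or classifier Reality AI Tools selects, so this example uses two common hand-picked acoustic features (RMS energy and zero-crossing rate) and a plain perceptron, a simple linear classifier whose weights are nudged after every misclassified example. The 16 kHz sample rate, the 128-sample frame length, and the helper names (`extract_features`, `synth_frame`, `train_perceptron`, `predict`) are hypothetical, and the training frames are synthetic tones and noise standing in for AudioSet clips.

```python
import math
import random

SAMPLE_RATE = 16_000  # assumed sampling rate in Hz (not stated in the source)
FRAME_LEN = 128       # assumed number of samples per analysis frame


def extract_features(frame):
    """Return [RMS energy, zero-crossing rate] for one audio frame."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    # Count sign changes between consecutive samples.
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    zcr = crossings / (len(frame) - 1)
    return [rms, zcr]


def synth_frame(rng, freq_hz, amp, noise_amp):
    """Synthetic frame: a sine tone with random phase plus uniform noise (illustration only)."""
    phase = rng.uniform(0, 2 * math.pi)
    return [
        amp * math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE + phase)
        + rng.uniform(-noise_amp, noise_amp)
        for t in range(FRAME_LEN)
    ]


def predict(w, b, features):
    """Linear decision rule: 1 = scream, 0 = non-scream."""
    return 1 if sum(wj * xj for wj, xj in zip(w, features)) + b > 0 else 0


def train_perceptron(X, y, max_epochs=1000):
    """Perceptron training on 0/1 labels; stops after an epoch with no mistakes."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            if predict(w, b, xi) != yi:
                step = 1 if yi == 1 else -1  # shift the boundary toward the missed class
                w = [wj + step * xj for wj, xj in zip(w, xi)]
                b += step
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


rng = random.Random(0)
# Hypothetical stand-ins for the real classes: a loud high-pitched tone ("scream"),
# a quiet low hum, and quiet broadband noise (freq 0 and amp 0 leave only the noise term).
screams = [synth_frame(rng, 3000, 0.8, 0.05) for _ in range(10)]
hums = [synth_frame(rng, 200, 0.2, 0.05) for _ in range(10)]
noises = [synth_frame(rng, 0, 0.0, 0.3) for _ in range(10)]

X = [extract_features(f) for f in screams + hums + noises]
y = [1] * len(screams) + [0] * (len(hums) + len(noises))
w, b = train_perceptron(X, y)
train_acc = sum(predict(w, b, xi) == yi for xi, yi in zip(X, y)) / len(y)
print(f"weights={w}, bias={b}, training accuracy={train_acc:.2f}")
```

Because the toy classes are linearly separable (the "scream" tone is far louder than either non-scream class), the perceptron is guaranteed to stop after a finite number of updates with every training frame classified correctly. Real scream and non-scream recordings overlap much more, so a practical system needs richer features and a stronger classifier than this sketch.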

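With this approach, you can build an effective scream detection system that enables a fast, reliable emergency response and provides critical safety protection across many applications.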

Application example

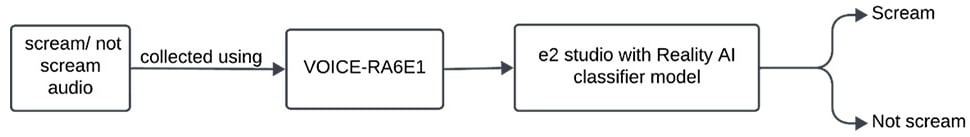

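The demo uses the Renesas VOICE-RA6E1 Voice User Demonstration Kit to capture audio signals in a real-world environment. The audio is then processed by a classification model trained with Renesas Reality AI Tools, which decides whether each sound is a scream.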
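
The source does not describe how per-frame classifier decisions are turned into an alarm. One common approach, assumed here rather than taken from Reality AI, is a k-of-n vote: fire only when at least `min_hits` of the last `window` frames were classified as screams, so that a single isolated false positive does not raise an alarm. `ScreamAlarm`, `run_stream`, and the default window sizes are hypothetical.

```python
from collections import deque


class ScreamAlarm:
    """Fire when at least `min_hits` of the last `window` frame decisions were screams."""

    def __init__(self, window=5, min_hits=3):
        if not 0 < min_hits <= window:
            raise ValueError("need 0 < min_hits <= window")
        self.min_hits = min_hits
        # Most recent per-frame decisions; the oldest is dropped automatically.
        self.recent = deque(maxlen=window)

    def update(self, is_scream):
        """Feed one per-frame decision; return True if the alarm should fire now."""
        self.recent.append(bool(is_scream))
        return sum(self.recent) >= self.min_hits


def run_stream(decisions, window=5, min_hits=3):
    """Return the frame indices at which the alarm fires for a sequence of 0/1 decisions."""
    alarm = ScreamAlarm(window, min_hits)
    return [i for i, d in enumerate(decisions) if alarm.update(d)]


# Hypothetical decision sequence: one isolated positive, then a sustained scream.
frames = [0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0]
print(run_stream(frames))  # prints [8, 9, 10]
```

Raising `min_hits` lowers the false-alarm rate at the cost of a slightly later alarm; the right trade-off depends on the application.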

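In real-world tests, the Renesas scream detection model recognized screams with over 90% accuracy within 2 meters of the test board. Background noise, including wind, elevator music, conversation, a crying baby, and a ringing phone, was added to the test environment to verify that the model still recognizes screams for help accurately in complex conditions.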
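
The source reports accuracy without giving test counts or defining the metric. As a sketch of how such figures are typically computed from labeled test clips (1 = scream, 0 = non-scream), the function below reports accuracy together with recall and false-alarm rate, because a single accuracy number can hide whether the errors are missed screams or false alarms. The function name and the labels in the example are made up for illustration and are not the source's test data.

```python
def detection_metrics(y_true, y_pred):
    """Accuracy, recall (share of screams detected) and false-alarm rate from 0/1 labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty sequences of equal length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    positives, negatives = tp + fn, tn + fp
    return {
        "accuracy": (tp + tn) / len(y_true),
        "recall": tp / positives if positives else float("nan"),
        "false_alarm_rate": fp / negatives if negatives else float("nan"),
    }


# Made-up labels: one missed scream and one false alarm out of eight clips.
metrics = detection_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0, 0, 1])
print(metrics)  # prints {'accuracy': 0.75, 'recall': 0.75, 'false_alarm_rate': 0.25}
```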

Build the application example with ease

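Users can capture audio signals with the Renesas e² studio IDE and integrate an AI model generated by Renesas Reality AI Tools. After collecting data from the public dataset*, you can use Reality AI Tools to extract features, train the model, and deploy the final model as C code.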

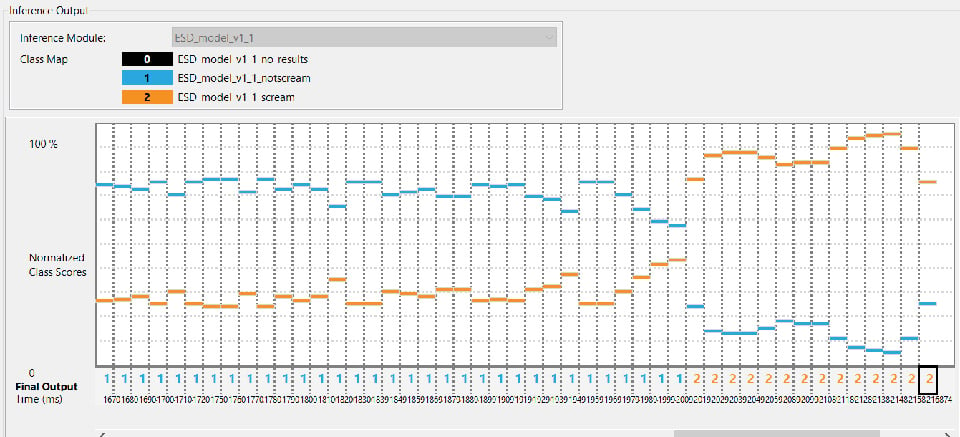

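Once deployed, the model can be tested in real time in the e² studio IDE. After integration, users can validate the model thoroughly in real-world environments with the VOICE-RA6E1 board and visualize the test results in the AI Live Monitor.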

Experience the seamless, fast integration of Renesas Reality AI Tools and the e² studio IDE for model training, deployment, and testing.

Summary

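The Reality AI scream detection application shows the great potential of machine learning to improve safety in many scenarios. It also shows how users can use Renesas technology to combine feature extraction, model training, and deployment with real-time response. The scalable Reality AI Tools can generate machine learning models for a wide range of Renesas MCU and MPU devices.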

Download the sample project files and start your evaluation. Try the trial version of Reality AI Tools today.

*Public dataset: the Google AudioSet database