Driving the Future of Automotive AI: Meet RoX AI Studio

In today’s automotive industry, onboard AI inference engines power numerous safety-critical Advanced Driver Assistance Systems (ADAS) features, all of which require consistent, high-performance processing. Because AI model engineering is inherently iterative (numerous cycles of 'train, validate, and deploy'), it is crucial to assess model performance on actual silicon at every step of product development. This hardware-based validation not only strengthens confidence in model engineering decisions but also ensures that AI solutions are reliable and meet their target KPIs for deployment in in-vehicle AI applications throughout the product lifecycle.

Meet RoX AI Studio, designed specifically for today's innovative automotive teams. With RoX AI Studio, you can remotely benchmark and evaluate your AI models on Renesas R-Car SoCs from your web browser (Figure 1), all while leveraging a secure MLOps infrastructure that puts your engineering team in the fast lane toward production-ready solutions.

RoX AI Studio is a cornerstone of the Renesas Open Access (RoX) Software-Defined Vehicle (SDV) platform, which offers an integrated suite of hardware, software, and infrastructure for customers designing state-of-the-art AI-powered automotive systems. We're dedicated to empowering products with advanced intelligence, high performance, and an accelerated product lifecycle. RoX AI Studio helps you unlock the full potential of next-generation vehicles by embracing a shift-left approach, moving model validation on target hardware earlier in development.

Transforming Product Engineering with RoX AI Studio

The modern vehicle is evolving into a powerful, intelligent platform, requiring automotive companies to accelerate the development, testing, and optimization of AI models that enhance safety, efficiency, and in-vehicle experiences. Are you ready to take your automotive AI development to the next level? RoX AI Studio, our cloud-native MLOps platform, transforms this process by bringing the hardware lab directly to your browser. This virtual lab environment lets teams concentrate on unlocking innovative capabilities while eliminating the delays and expenses often associated with setting up and maintaining traditional infrastructure. With RoX AI Studio, you can begin your AI model journey immediately, starting development on day one.

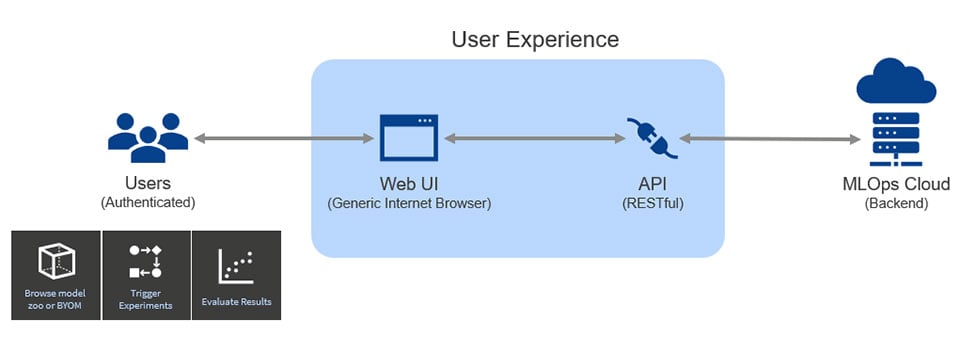

Let's delve into the platform architecture of RoX AI Studio (Figure 2) and map each component to the value it delivers to customers.

User Experience (UX) with Web UI and API

The RoX AI Studio Web UI is a web-native graphical user interface that streamlines the management, benchmarking, and evaluation of AI models on Renesas R-Car SoC hardware.

Web UI

Through this front-end product, users can register new AI models, configure hardware-in-the-loop (HIL) inference experiments, and conduct benchmarking and performance evaluations of their models, all within a browser environment.

API

The API bridges the Web UI and the MLOps backend, facilitating robust communication and data exchange, and is designed for high performance and strong security. It consists of a broad set of endpoints that collectively enable a wide range of functions, including user management, model operations, dataset management, experiment orchestration, and HIL model benchmarking/evaluation. By decoupling the client from backend complexity, the API enables rapid integration of new features and workflows, supporting continuous improvement as customer needs evolve.

The streamlined architecture of the RoX AI Studio Web UI and API empowers users to quickly engage with their tasks, leveraging their preferred browser for immediate access (Figure 3). This approach eliminates barriers to entry, enabling each user to start working on model registration, experiment setup, and evaluation instantly, without delays or the need for specialized client software.

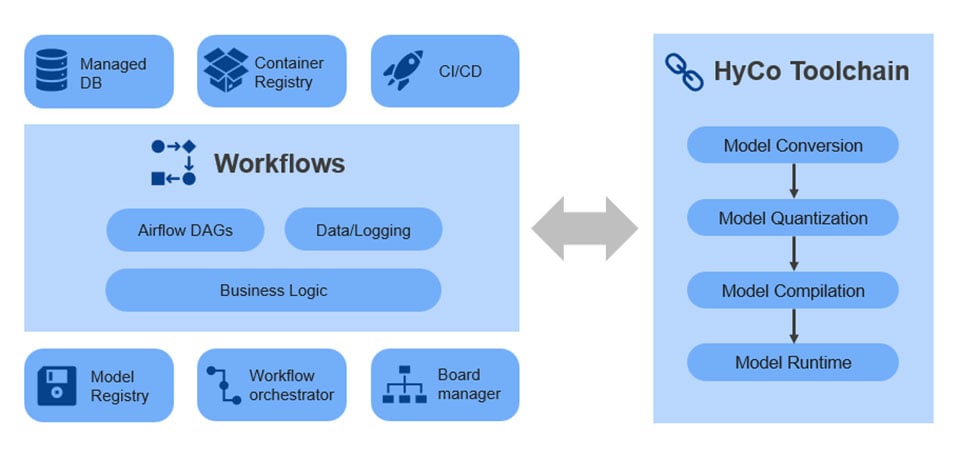

MLOps with Workflows and HyCo Toolchain

The API endpoints in RoX AI Studio are underpinned by robust MLOps business logic, which ensures reliable execution for every incoming API request. Each experiment initiated through the platform follows a systematic and predefined sequence of steps. These steps are organized as Directed Acyclic Graphs (DAGs) and orchestrated using Apache Airflow, a proven workflow management tool.
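To make the DAG idea concrete, here is a minimal sketch that models one experiment as a dependency graph and groups its steps into "waves" that could run in parallel. It uses Python's standard `graphlib` module rather than Apache Airflow (where dependencies are typically chained with `>>`), and the step names are hypothetical illustrations, not RoX AI Studio's actual task definitions.

```python
from graphlib import TopologicalSorter

# Hypothetical experiment pipeline: each step maps to the set of steps it depends on.
# These names are illustrative assumptions, not the platform's real tasks.
EXPERIMENT_DAG = {
    "register_model": set(),
    "prepare_dataset": set(),
    "allocate_board": set(),
    "compile_model": {"register_model"},
    "run_hil_inference": {"compile_model", "prepare_dataset", "allocate_board"},
    "release_board": {"run_hil_inference"},
    "report_metrics": {"run_hil_inference"},
}


def execution_waves(dag):
    """Group steps into waves; steps within one wave have no mutual dependencies."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # also raises CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every step whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # mark them finished to unlock their successors
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(EXPERIMENT_DAG), start=1):
        print(f"wave {i}: {', '.join(wave)}")
```

Because the graph is acyclic, an orchestrator can always find a valid order, and independent steps such as dataset preparation and board allocation need not wait for each other.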

Workflows

Apache Airflow automatically manages the queuing, scheduling, and concurrency of experiment tasks, allowing the system to efficiently handle many simultaneous user requests with finite cloud computing resources. The backend architecture leverages a suite of MLOps and third-party microservices, each deployed as a Docker container or integrated through a third-party API. This design separates the execution of individual intermediate steps from the overarching control plane, which is governed by the DAG workflows. Such separation provides greater flexibility, enabling the platform to scale dynamically across distributed cloud computing environments and adapt to fluctuating user demand.

Moreover, this approach enables more granular product development for each microservice. By supporting out-of-the-box (OOB) execution of individual components, RoX AI Studio enables rapid iteration and targeted enhancements that keep pace with evolving platform requirements and user needs. Each workflow incorporates model management, data management, and experiment management, powered by the Model Registry, Managed DB, and Board Manager components.
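The queuing and concurrency control described above can be illustrated with a small sketch: many experiment requests arrive at once, but only a fixed number of execution slots exist, so the rest wait their turn. Airflow provides its own mechanisms for this (for example, pools); the `asyncio.Semaphore` below is only a stand-in for the general principle, and the function names are hypothetical.

```python
import asyncio


async def run_experiment(name, slots, stats):
    # Requests beyond the slot limit queue here until a slot frees up.
    async with slots:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.01)  # placeholder for compile/inference work
        stats["active"] -= 1
        return f"{name}: done"


async def handle_requests(names, max_slots):
    """Run all requests while never exceeding max_slots at the same time."""
    slots = asyncio.Semaphore(max_slots)
    stats = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(run_experiment(n, slots, stats) for n in names))
    return results, stats["peak"]


if __name__ == "__main__":
    requests = [f"user{i}-exp" for i in range(10)]
    results, peak = asyncio.run(handle_requests(requests, max_slots=3))
    print(len(results), "experiments finished; peak concurrency:", peak)
```

Every request eventually completes, yet the number of experiments running at once stays bounded by the available resources.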

HyCo Toolchain

Custom layers and operators are increasingly prevalent as AI model architecture continues to evolve. To address this opportunity, a high-performance custom compiler known as HyCo (Hybrid Compiler) is offered specifically for the R-Car Gen4 product line. HyCo has a hybrid compiler architecture, comprising both front-end and back-end compiler components, to ensure scalability and adaptability for custom implementations. At the core of this approach, TVM functions as a unifying backbone, enabling seamless integration of customizations in the front-end compiler with accelerator-specific back-end compilers. This design supports efficient compilation and optimization tailored to heterogeneous hardware accelerators within the SoC.
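The idea of a unifying backbone that hands work to accelerator-specific back ends resembles graph partitioning, as in TVM's bring-your-own-codegen (BYOC) flow: operators a back end supports are offloaded to it, and anything unsupported (such as a custom layer) falls back to the CPU. The pure-Python sketch below illustrates that concept only. It treats the model as a simple chain of operators, and the back-end names and supported-operator sets are hypothetical, not HyCo's configuration or the actual capabilities of R-Car Gen4 accelerators.

```python
from itertools import groupby

# Hypothetical back ends and the operators each can execute (illustrative only).
BACKENDS = {
    "accel_a": {"conv2d", "relu", "add"},
    "accel_b": {"dense", "softmax"},
}
FALLBACK = "cpu"  # target for operators no accelerator supports, e.g. custom layers


def assign(ops):
    """Map each operator to the first back end that supports it, else the fallback."""
    return [
        (op, next((name for name, ops_ok in BACKENDS.items() if op in ops_ok), FALLBACK))
        for op in ops
    ]


def partition(ops):
    """Merge consecutive operators placed on the same back end into one subgraph."""
    return [
        (target, [op for op, _ in group])
        for target, group in groupby(assign(ops), key=lambda pair: pair[1])
    ]


if __name__ == "__main__":
    model = ["conv2d", "relu", "custom_nms", "dense", "softmax"]
    for target, subgraph in partition(model):
        print(target, subgraph)
```

Grouping consecutive operators into subgraphs matters because each hand-off between back ends typically costs a data transfer, so larger offloaded regions are usually preferable.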

HyCo is integrated into a developer-oriented HyCo toolchain, also referred to as the AI Toolchain. Beyond the compiler itself, the AI Toolchain provides interfaces for ingesting both open-source model zoo assets and bring-your-own-model (BYOM) assets, including their pre-processing and post-processing software components. This shows how an AI toolchain can integrate with customer-specific model zoos, adding flexibility in deploying diverse AI workloads. Within the MLOps framework, various configurations of the AI toolchain are containerized as independent microservices. This modular approach allows standalone AI toolchain components to integrate robustly into MLOps workflows and scale dynamically in cloud environments.

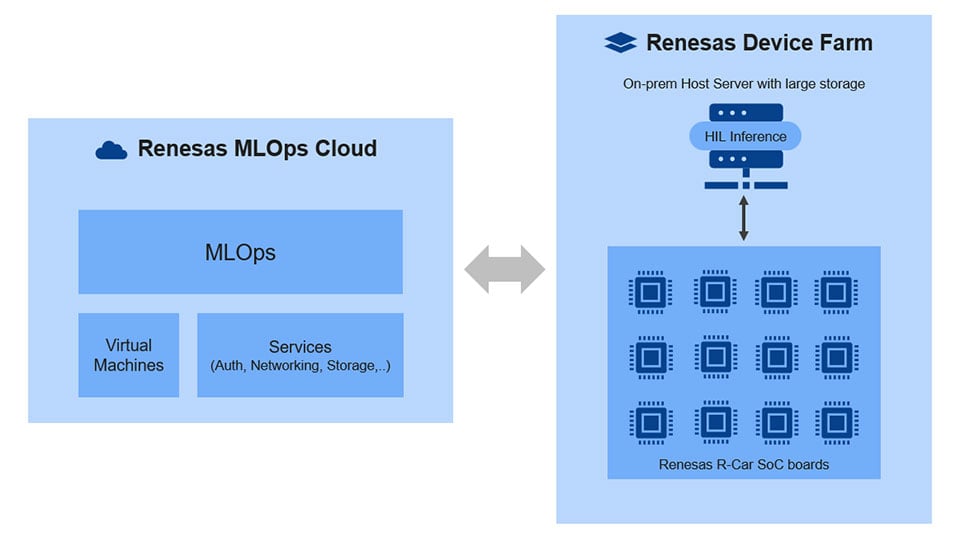

Infrastructure with MLOps Cloud and Device Farm

The hybrid infrastructure enables comprehensive end-to-end MLOps workflows, delegating HIL inference tasks to the Renesas Device Farm. The MLOps cloud platform is currently hosted on Azure, but its architecture is designed to support flexible deployment to other public or private cloud environments in the future.

MLOps Cloud

By utilizing a workflow-based MLOps architecture, we can securely enable multiple users within a single tenant to share computational resources, optimizing capital expenditure. This approach empowers customers to develop AI products without the need for significant individual investment for each developer. The architecture is also built to support seamless integration with private customer clouds, accommodating custom hardware configurations (such as CPU and GPU servers and shared bulk storage) alongside robust on-premises security infrastructure.

Renesas Device Farm

A secure on-premises device farm hosts multiple R-Car SoC development boards, providing the foundation for the hardware-in-the-loop (HIL) inference experiments essential to AI model benchmarking and evaluation. The cloud-based Board Manager microservice handles board allocation, setup, and release, streamlining resource management without requiring direct developer involvement. The MLOps workflow uses the device farm to execute HIL inference experiments without the delays commonly associated with traditional board provisioning, updating, and maintenance. A robust networking architecture secures each user's HIL inference sessions, maintaining the integrity and confidentiality of both data and AI models.
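The allocate, set up, and release cycle handled by the Board Manager can be sketched as a small thread-safe pool whose context manager guarantees that a board is returned even if an experiment fails. The `BoardPool` class, its methods, and the board IDs are hypothetical illustrations, not the Board Manager's actual interface.

```python
import threading
from contextlib import contextmanager


class BoardPool:
    """Hypothetical board manager: hands out free boards and always reclaims them."""

    def __init__(self, board_ids):
        self._free = list(board_ids)
        self._lock = threading.Lock()  # guards the free list across concurrent callers

    def allocate(self):
        with self._lock:
            if not self._free:
                raise RuntimeError("no board available")
            return self._free.pop(0)

    def release(self, board_id):
        with self._lock:
            self._free.append(board_id)

    @property
    def free_count(self):
        with self._lock:
            return len(self._free)

    @contextmanager
    def session(self):
        board = self.allocate()
        try:
            # Board setup (e.g. flashing images, copying the compiled model) would go here.
            yield board
        finally:
            self.release(board)  # runs even if the HIL experiment raises


if __name__ == "__main__":
    pool = BoardPool(["rcar-01", "rcar-02"])
    with pool.session() as board:
        print("running HIL inference on", board)
    print("free boards:", pool.free_count)
```

Tying release to a `finally` block keeps a failed experiment from leaving a board stranded, which is what lets developers avoid manual board housekeeping.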

What Advantages Does RoX AI Studio Bring to Customers?

- Faster Time-to-Market: Shift-left your AI product lifecycle. Start model evaluation and iteration early, long before our silicon gets delivered to your labs!

- Managed, Scalable Infrastructure: Forget about maintaining costly labs. RoX AI Studio delivers scale, security, redundancy, and automation out of the box.

- Effortless Experimentation: Register your own models (BYOM), spin up inference experiments, and easily compare results, all through a simple dashboard.

- Collaborate with Confidence: Centralized, cloud-based access lets distributed global teams work together seamlessly on model benchmarking and evaluations.

Imagine a world where your AI engineers are instantly productive, your teams collaborate without boundaries, and your prototypes move from idea to reality faster than ever before. With RoX AI Studio, that world is already here!

Sign up for a hands-on demo of RoX AI Studio and accelerate your journey to intelligent, efficient, and safe software-defined vehicles.